The Fastest Way of Importing Data with Ruby?

Importing huge amount of data is not something most of us have to worry about on a daily basis. But once in a while, you may be presented with tons of data that you have to put inside your application. Why? There are many reasons: maybe you’re creating some kind of a knowledge database, or you’ve just bought your direct competitor and want to merge their users’ data with your own. Pick your favorite, it doesn’t matter right now. What matters is that we have a task waiting to be done. So let’s try to deal with it.

Backstory

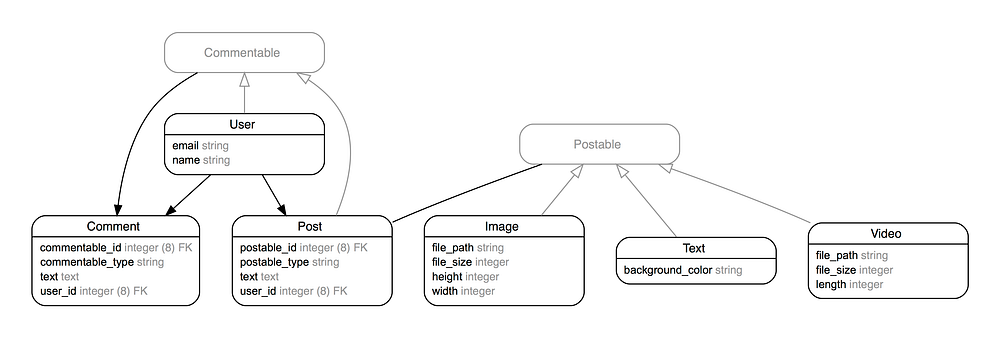

Let’s imagine we’re running Instagram-esque application with Ruby on Rails. Users can upload photos and videos there, and other Users can comment on them. Apart from commenting on Posts, Users can also leave comments on other Users’ profiles. Our database model may look like this:

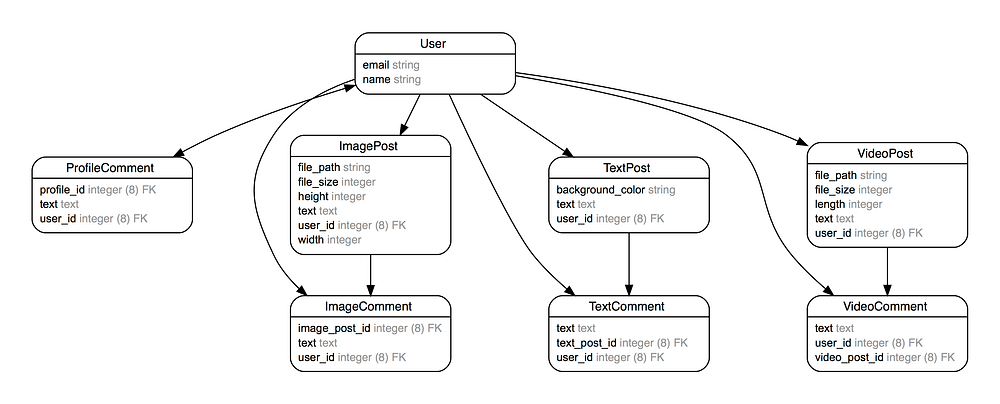

While we were busy designing database, our main competitor focused on actually getting people to use their application. They have 100 000 Users, each of them having 10 Images, 10 Videos and 10 Texts. Each of those posts has been commented 10 times. Each profile has been commented 10 times as well. But their database looks like this:

Somehow we bought their application and now have to merge their data into ours (yup, we had some Users as well). To make things harder, they’re running MySQL while we’re using PostgreSQL. Since those two database schemas seem to differ quite a lot, and our database already has some data inside, we make a guess that scripts like pgloader just won’t cut it.

We might try writing a simple task for data import. While there are hundreds of ways to do this (most of them probably not too good 🤓) we will look at just two of them which are making it all run really fast.

Basic approach

To migrate data we’ll probably want to create a rake task, but to do this we must first access “foreign” data. Can we simply create classes for those models? Well, actually we can. First, we have to configure the second database in config/database.yml by doing something like this:

Then we can create some classes to use this connection:

Now, we can proceed to write a base of our rake task:

And that’s when we stumble upon the first problem. Since Comment has both User and a Post, how can we add it from within this loop? It seems like our Comment could either have a User and possibly lack Post or have a Post but not the User. This can be easily solved by keeping original IDs and offsetting them, so they don’t collide with existing records. Think about something like this:

Warning!

To do this, we have to stop the application. Any record created while our import script is running would result in a catastrophic failure 😳

Now, when creating Comments we don’t have to actually pass User and Post there — we can just use their IDs, which either already exist or eventually will (when creating Users we must set their IDs the same way).

But this will still fail because Rails try to keep our data safe and add database constraints. We can disable it at the beginning of the script with:

Second warning!

Don’t forget to enable it once the import is over.

Now we can run our rake task and it should finish without errors. But it will take about 60 hours to complete. Stopping the application for so long doesn’t sound good, right? Let’s now fast forward a few iterations and see what we may end up with after some work.

Parallel batch inserts with eager loading and Sequel

A lot of stuff going on in this header, so how about we tackle it piece by piece? It will be best if we look at them from last to first:

- Sequel

Sequel is one of available ORMs for Ruby (think ActiveRecord but with 3x less memory footprint). With ActiveRecord we’d have to allocate close to 100GB during our import process, while with Sequel we can keep it under 35GB. Less objects to build = faster processing. - Eager Loading

If we are not careful enough we may run into N+1 query problem. Luckily it can be easily avoided with eager loading. In ActiveRecord we do in with.includesand in Sequel we have.eager. But they both have the same result, which is reducing the number of selects we make. - Batch Insert

Since we’ve reduced the number of selects we should also try to bring down the number of inserts. The easiest way is to avoid saving every object separately, but rather saving them in bigger batches. There’s a gem for this, which is called activerecord-import. Under the hood it creates SQL queries like this one:

- Parallel

After doing above optimisations we may look at the logfile and see that we spend some time processing data between reading and writing them to the database. We could probably run our task in a few parallel processes to make full use of our database (when one process is writing others have time to prepare data). You might have never done parallel processing in Ruby, but it’s actually quite easy (if we skip the hard parts 😅):

We can now run our new import script and it finishes in just 3.6 hours. With only a few easy changes we’ve achieved 15x speedup. Do you think we can do it even faster?

Skipping Ruby

The previous method was really useful as it allowed us to do any kind of data processing — we could have done stuff like generating passwords for Users or extracting something from JSON before saving to the database. But if we take a closer look at what we’re doing now, we can see that the only processing we need is offsetting IDs. It sounds like something we can do with SQL alone.

So what if we could skip ActiveRecord, Sequel and all this slow stuff entirely, and try to migrate our data using SQL? For loading data into Postgres we can use COPY command, which is even faster than batch insert and allows reading data from a text file. On the other end, we have MySQL which can save select results into CSV file. What if we try to make them work together?

Now we only have to write similar queries for every resource and we’re pretty much done. It couldn’t get much easier. And the results are just amazing. Importing all data (which took us 60 hours in the beginning) finishes in just 8 minutes.

That’s 430x faster!

This is a solution we can definitely accept 👍

Wrapping up

We’ve taken a look at two possible ways of fast data import with Ruby (with some help from raw SQL queries of course).

First of them is really useful when we need to do some complex data processing before we can save it to the database. The second one only allows for very basic processing but gives an incredible speed boost.

I have yet to find any faster method of importing data, but if you happen to know one, don’t hesitate to share it in the comments below this article.

If you enjoyed this post, please hit the clap button below 👏👏👏